
Gated Recurrent Unit

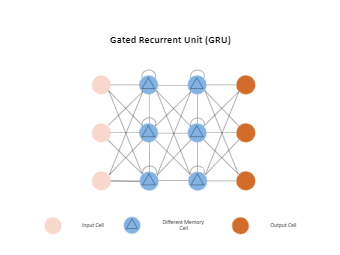


Kiraaaa

Published on 2021-06-11

Gated Recurrent Units (GRUs) are a gating mechanism in recurrent neural networks. GRUs are used to mitigate the vanishing gradient problem of a standard RNN. As the Gated Recurrent Unit template suggests, GRUs can be considered a variation of the long short-term memory (LSTM) unit because both have a similar design and produce similar results in some cases. A GRU has two gates, the update gate and the reset gate, each of which is a vector of values between 0 and 1. The update gate controls how much of the previous hidden state is carried forward and how much is replaced by new candidate information. The reset gate controls how much of the previous hidden state is used when that candidate is computed. Together, these two vectors decide which information passes to the output.
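The gating described above can be illustrated with a minimal sketch of a single GRU step in plain Python. This is not taken from the template itself: the names `gru_step`, `init_params`, and `affine`, the weight layout, and the random initialisation are all assumptions made for illustration. The sketch uses one common convention in which the new state is `(1 - z) * h_prev + z * h_cand`; some references swap the roles of `z` and `1 - z`.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b using plain Python lists."""
    return [
        sum(w * xi for w, xi in zip(W[i], x))
        + sum(u * hi for u, hi in zip(U[i], h))
        + b[i]
        for i in range(len(b))
    ]


def gru_step(x, h_prev, params):
    """One GRU time step (illustrative); returns (h_new, z, r)."""
    # Update gate z: how much of each hidden unit is overwritten.
    z = [sigmoid(a) for a in affine(params["Wz"], params["Uz"], params["bz"], x, h_prev)]
    # Reset gate r: how much of the previous state feeds the candidate.
    r = [sigmoid(a) for a in affine(params["Wr"], params["Ur"], params["br"], x, h_prev)]
    # Candidate state is computed from the reset-scaled previous state.
    h_reset = [ri * hi for ri, hi in zip(r, h_prev)]
    h_cand = [math.tanh(a) for a in affine(params["Wh"], params["Uh"], params["bh"], x, h_reset)]
    # Blend the old state and the candidate, element by element.
    h_new = [(1.0 - zi) * hp + zi * hc for zi, hp, hc in zip(z, h_prev, h_cand)]
    return h_new, z, r


def init_params(input_size, hidden_size, seed=0):
    """Hypothetical helper: small random weights, zero biases."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    params = {}
    for gate in ("z", "r", "h"):
        params["W" + gate] = mat(hidden_size, input_size)
        params["U" + gate] = mat(hidden_size, hidden_size)
        params["b" + gate] = [0.0] * hidden_size
    return params


# Run two time steps over a toy input sequence.
params = init_params(input_size=3, hidden_size=2)
h = [0.0, 0.0]
for x in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):
    h, z, r = gru_step(x, h, params)
print(h)
```

Under this convention, an update gate close to 0 leaves the hidden state nearly unchanged, which is one way a GRU can carry information across many time steps.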

Tag

biology
